RBE3002 is the last class in the Unified Robotics series at WPI. It focuses on simultaneous localization and mapping (SLAM) using a differential-drive TurtleBot3 Burger robot. The main goals of the class were to create ROS nodes for path planning and driving that interface with the TurtleBot's drivetrain and LiDAR sensor.

The robot would drive around an unknown field, mapping all of it without colliding with walls, until it decided the map was complete, and then drive back to where it started. After the mapping was completed, the robot was "kidnapped": placed at a random location on the field and told to drive to a different specified location without hitting any walls, which requires both an accurate map and robust localization.

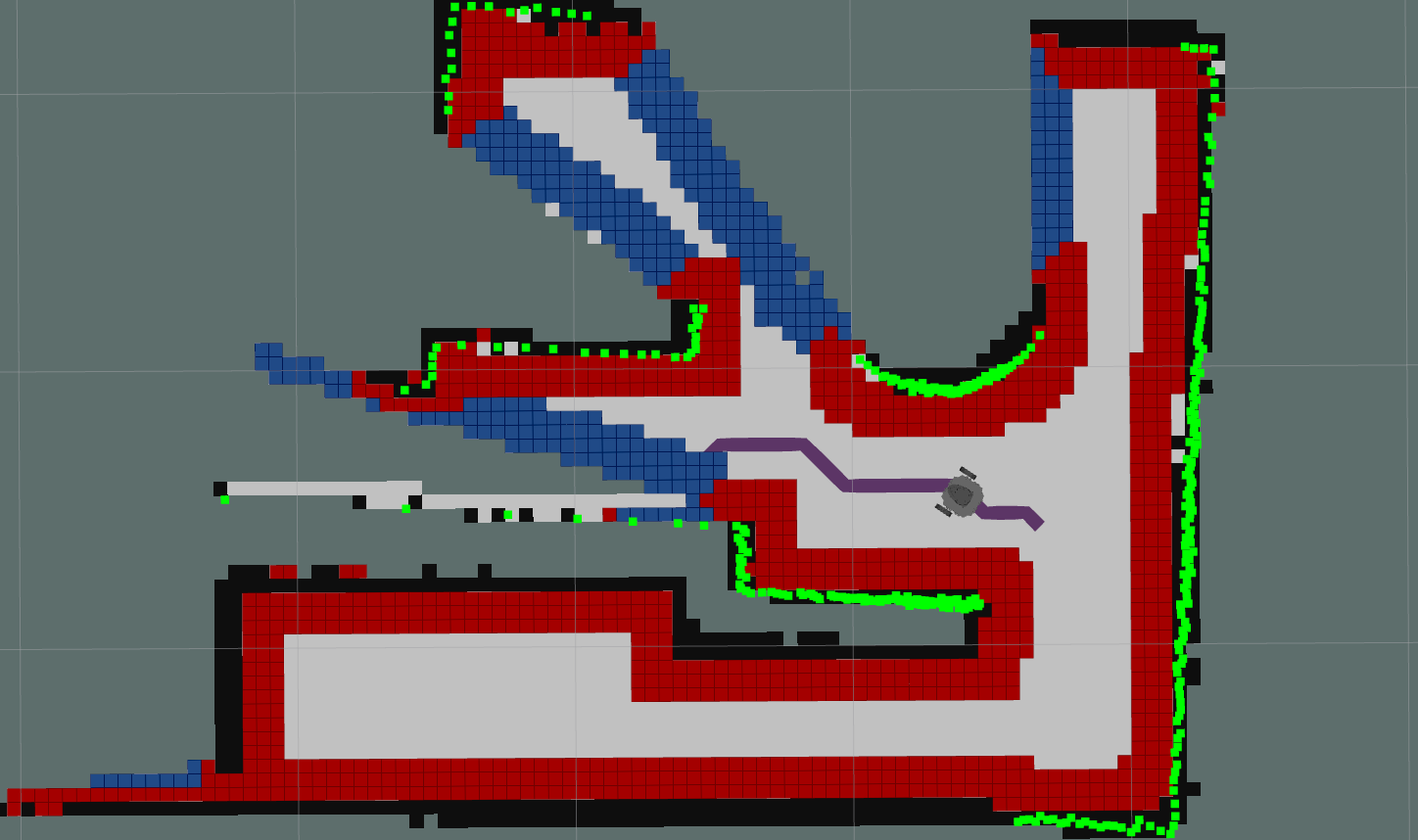

To map the field, my team fed data from the onboard LiDAR into the ROS Gmapping package, which produced a 2D occupancy grid of the surrounding area. From that grid, the walls and unknown regions (frontiers) were padded to create the C-space (red in the photo on the right). We also built an A* pathfinding algorithm to find an optimal path from the robot to a target cell, which was used to navigate to unexplored frontiers and other specified locations. The A* heuristic was the Euclidean distance to the desired location, and we added cost functions to discourage paths from passing through the dangerous C-space and to pull the path toward the center of the open area. In the photo on the right, the purple path leads to the blue unexplored frontier cells; it not only avoids the C-space but also stays in the middle of the driving region. These heuristics worked exceptionally well, keeping the robot from colliding with walls and helping it create an accurate map of the field.
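The padding and A* steps can be sketched roughly as follows. This is a minimal illustration, not our actual ROS node: the grid is a plain list of lists rather than a ROS occupancy grid message, the names `pad_walls`, `a_star`, and `cspace_penalty` are hypothetical, motion is limited to four neighbors, and a flat penalty for entering the C-space stands in for the cost functions described above (it does not attempt the path-centering term).

```python
import heapq
import math

FREE, WALL = 0, 1  # hypothetical cell labels for this sketch


def pad_walls(grid, radius):
    """Return the set of free cells within `radius` cells of any wall (the C-space)."""
    rows, cols = len(grid), len(grid[0])
    cspace = set()
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != WALL:
                continue
            for dr in range(-radius, radius + 1):
                for dc in range(-radius, radius + 1):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == FREE:
                        cspace.add((nr, nc))
    return cspace


def a_star(grid, start, goal, cspace, cspace_penalty=10.0):
    """Return a list of (row, col) cells from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Straight-line (Euclidean) distance from this cell to the goal.
        return math.hypot(cell[0] - goal[0], cell[1] - goal[1])

    open_heap = [(heuristic(start), start)]
    came_from = {}
    cost_so_far = {start: 0.0}
    closed = set()

    while open_heap:
        _, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if current in closed:
            continue
        closed.add(current)
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (current[0] + dr, current[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            if grid[nxt[0]][nxt[1]] == WALL:
                continue
            # Unit step cost, plus an extra charge for entering the C-space.
            step = 1.0 + (cspace_penalty if nxt in cspace else 0.0)
            new_cost = cost_so_far[current] + step
            if new_cost < cost_so_far.get(nxt, math.inf):
                cost_so_far[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), nxt))
    return None
```

Because the penalty only adds to the step cost, the Euclidean heuristic still never overestimates the remaining cost, so the search stays optimal with respect to the penalized costs. A larger `cspace_penalty` trades a longer path for more clearance from walls.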

In addition to the A* and Gmapping functionality, we implemented a few features to improve the robot's performance and speed. Pure pursuit is a standard method for following a path smoothly, but we did not know about it at the time of this course. Instead, we developed a similar approach: the robot drove toward the point about five points ahead on the trajectory, which smoothed out sharp corners and the small jogs that the grid resolution introduced into the path. Because the robot no longer needed to stop and rotate at each corner, it also drove faster. In addition, when driving to a frontier to explore it, the robot would stop short of the end of the path and recalculate its position to ensure it did not hit a wall. This was necessary because the robot could not update the occupancy grid in real time; the map was instead updated after each drive to a frontier.
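The look-ahead idea can be sketched in a few lines. This is an illustration under assumptions rather than our original code: `lookahead_target` is a hypothetical name, the path is a list of (x, y) waypoints, and "ahead" is measured from the waypoint currently nearest the robot.

```python
import math


def lookahead_target(path, robot_xy, lookahead=5):
    """Return the waypoint `lookahead` points past the waypoint nearest the robot."""
    if not path:
        raise ValueError("path must contain at least one waypoint")
    # Index of the waypoint closest to the robot's current position.
    nearest = min(range(len(path)), key=lambda i: math.dist(path[i], robot_xy))
    # Clamp so the target never runs past the final waypoint.
    return path[min(nearest + lookahead, len(path) - 1)]
```

Calling this each control cycle and steering toward the returned point makes the robot cut corners slightly instead of stopping to rotate at each one. Near the end of the path the target clamps to the last waypoint.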

Overall, the robot worked exceptionally well: it was able to drive around the unknown field, map it in its entirety, and localize itself within that map after being "kidnapped."