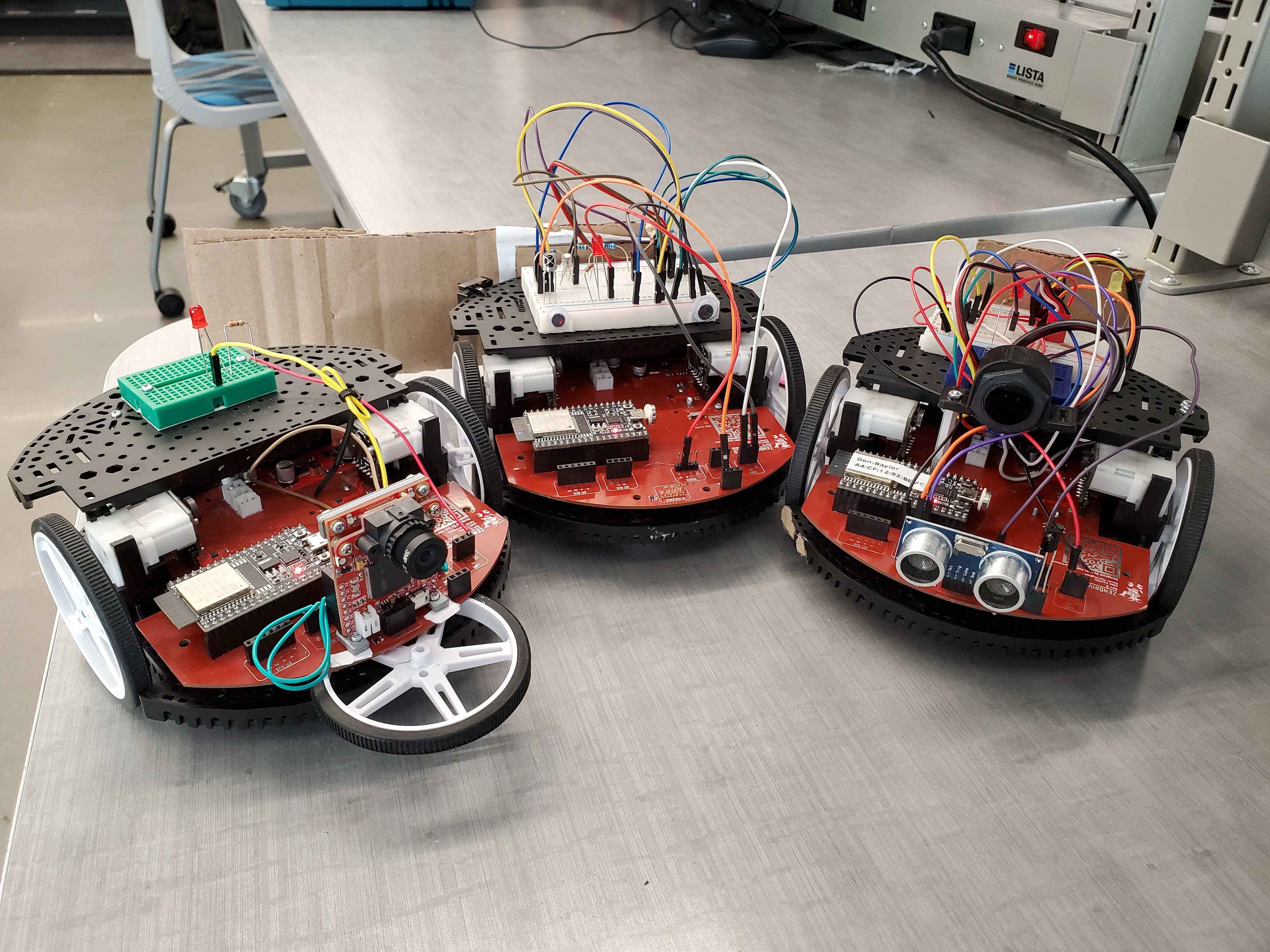

This was the second class in the Unified Robotics Sequence at WPI, and it was heavy on computer science (roughly 5k lines of code written). In this class, groups of three students were tasked with building three robots to act as characters in the play Romeo and Juliet. The robots autonomously reenacted the balcony scene and a fight scene, which required the characters Romeo, Tybalt, Mercutio, Juliet, and Friar Lawrence.

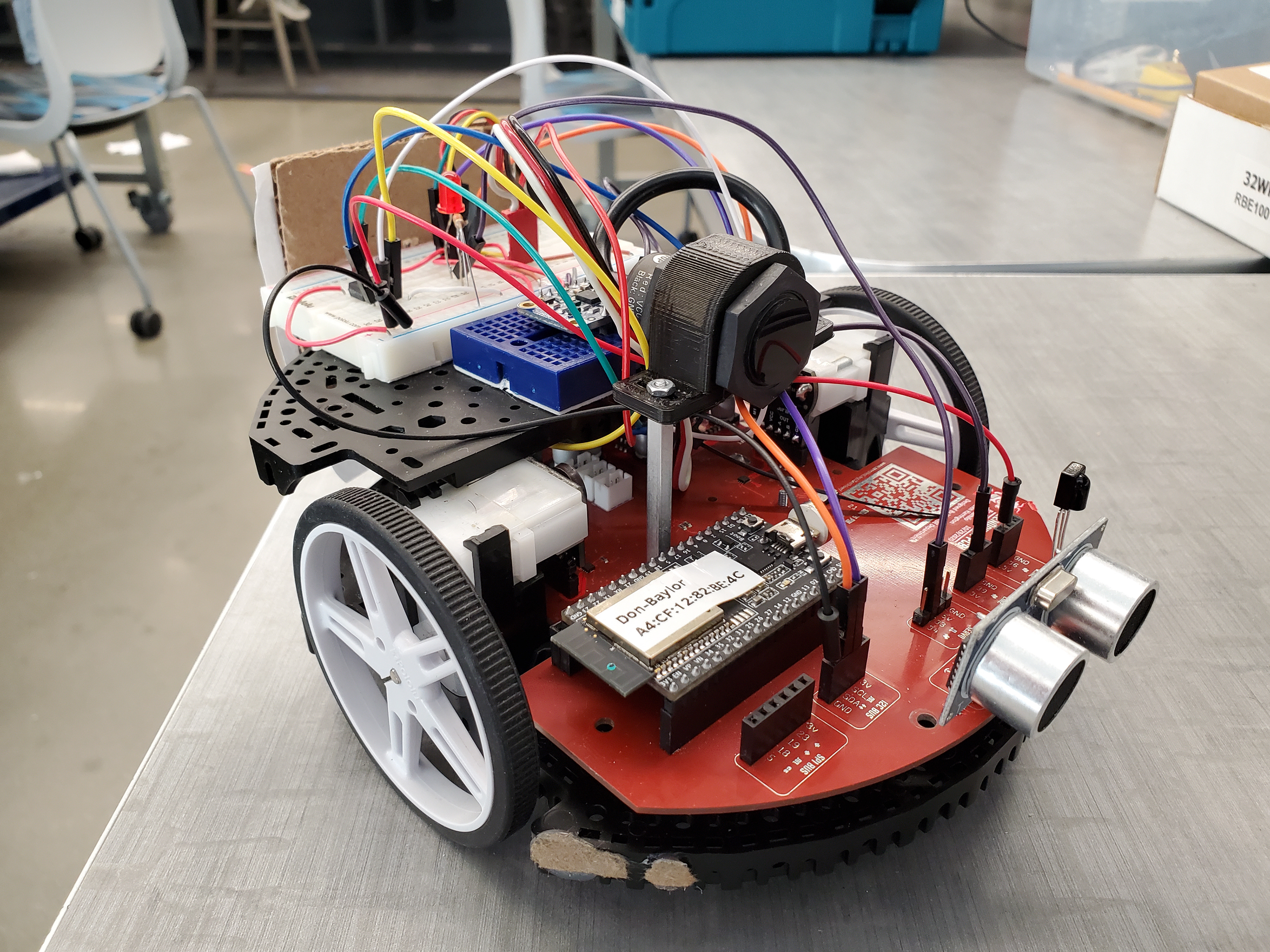

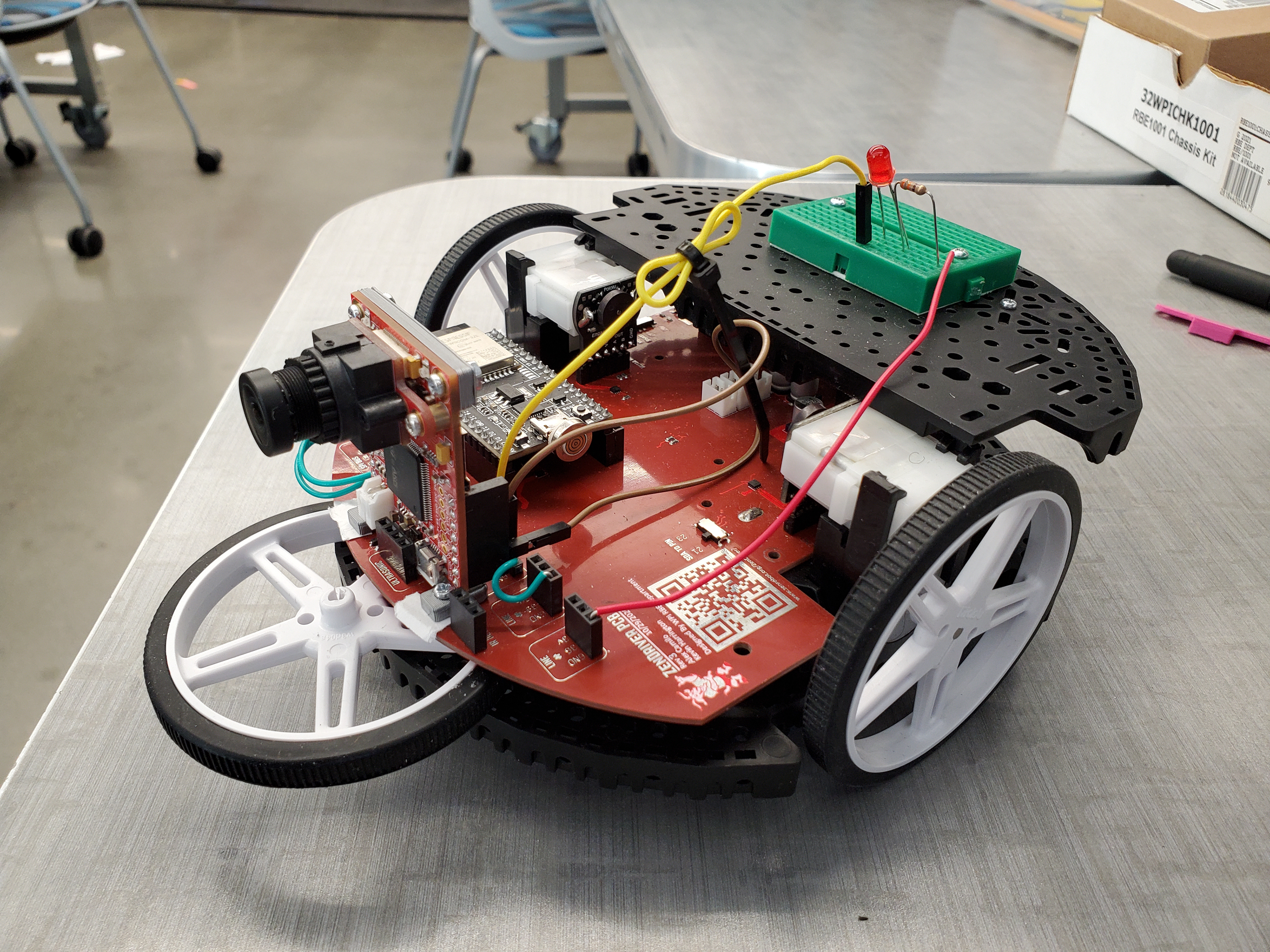

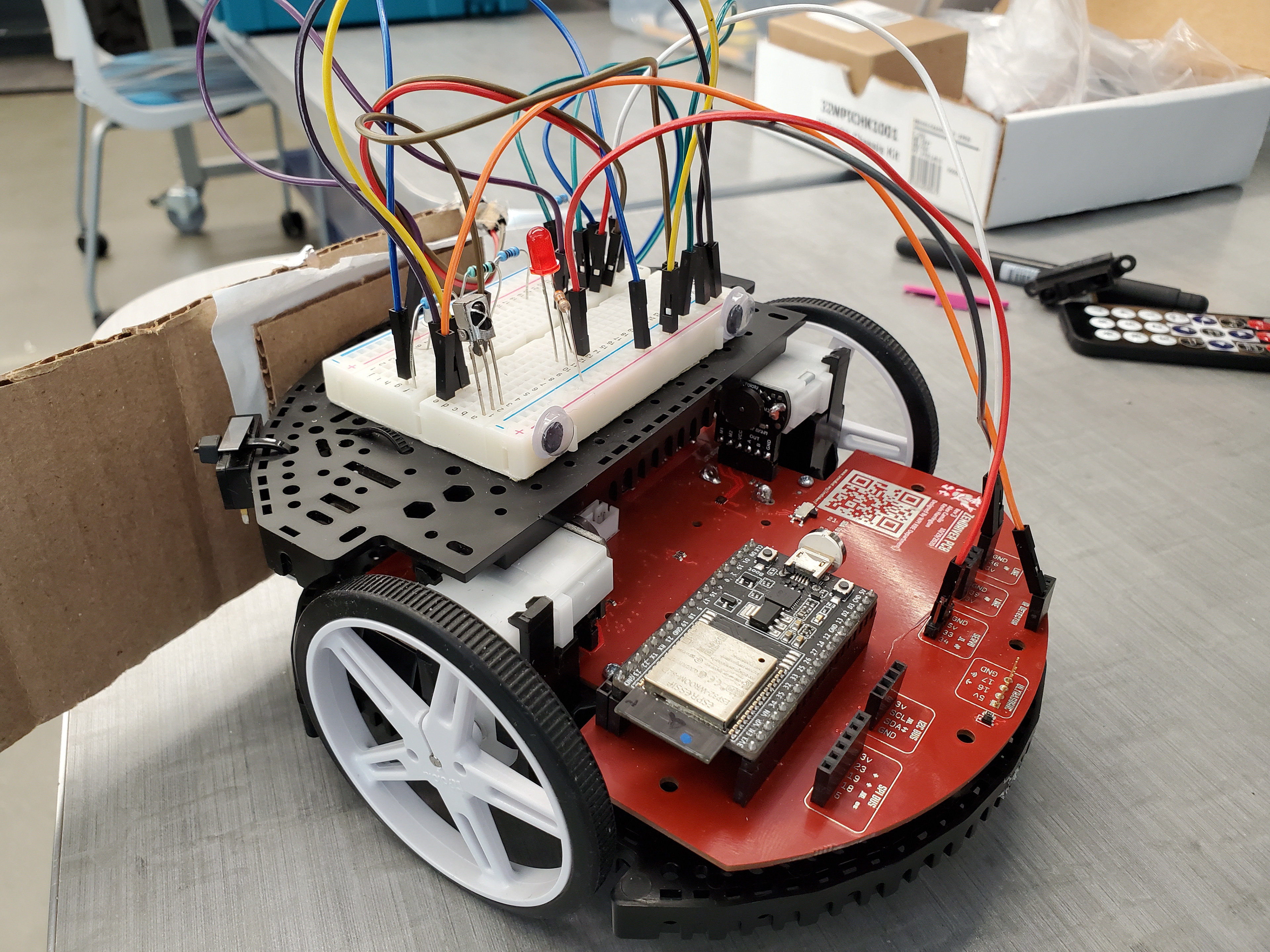

The three robots could only use a specific suite of sensors, so we split the sensors among the robots to give each one the best combination we could. For example, we gave the top robot the infrared camera and ultrasonic distance sensor, while the second robot received an OpenMV camera. The OpenMV camera performed onboard machine vision to track the position of QR codes attached to other robots, which let that robot follow a tagged robot and hold a set distance from it. The infrared camera and distance sensor could be used in a similar way. The infrared camera only returns the position of an IR signal (from an IR emitter on another robot), but combining that position with the distance reading from the ultrasonic sensor also lets a robot follow another robot. From there, we could use abstracted code to achieve the same effect with the two different systems. Telling both robots to go to the "follow" state would make them behave the same way, with each one relying on its own sensors.
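The abstraction described above can be sketched roughly as follows. This is a minimal illustration in Python, not our actual robot code: the class names, the normalized `bearing` value, the gains, and the 30 cm target distance are all assumptions made for the example. The idea is that both sensor setups produce the same kind of estimate, so a single "follow" routine can drive either robot.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Protocol, Tuple


@dataclass
class TargetEstimate:
    """Where the followed robot appears relative to this robot."""
    bearing: float   # horizontal offset of the target, normalized to [-1, 1]
    distance: float  # estimated range to the target in cm


class TargetSensor(Protocol):
    def read(self) -> Optional[TargetEstimate]: ...


class OpenMVTagSensor:
    """Hypothetical wrapper: the camera reports both offset and range of a tag."""

    def __init__(self, camera_read: Callable[[], Optional[Tuple[float, float]]]):
        self._camera_read = camera_read  # returns (bearing, distance) or None

    def read(self) -> Optional[TargetEstimate]:
        result = self._camera_read()
        if result is None:
            return None
        return TargetEstimate(*result)


class IRUltrasonicSensor:
    """Hypothetical wrapper: bearing from the IR camera, range from the ultrasonic."""

    def __init__(self, ir_read: Callable[[], Optional[float]],
                 sonar_read: Callable[[], Optional[float]]):
        self._ir_read = ir_read
        self._sonar_read = sonar_read

    def read(self) -> Optional[TargetEstimate]:
        bearing = self._ir_read()
        distance = self._sonar_read()
        if bearing is None or distance is None:
            return None
        return TargetEstimate(bearing, distance)


def follow_step(sensor: TargetSensor, target_distance: float = 30.0,
                k_dist: float = 0.02, k_turn: float = 0.5) -> Tuple[float, float]:
    """Return (left, right) wheel efforts for one step of the follow state."""
    est = sensor.read()
    if est is None:
        return 0.0, 0.0  # target lost: stop and wait
    forward = k_dist * (est.distance - target_distance)  # close the gap
    turn = k_turn * est.bearing                          # steer toward the target
    return forward + turn, forward - turn
```

With this structure, `follow_step` never needs to know which sensors are on the robot; only the small wrapper classes differ.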

In addition to creating the robots' "vision," we also had to interpret and filter the raw data from several sensors: the ultrasonic sensor, an IMU (an inertial measurement unit with a gyro, an accelerometer, and a magnetometer), and the wheel encoders on all robots. The raw output of these sensors was too noisy to use directly. For the ultrasonic sensor, an averaging filter that threw out values outside the range of trusted distances gave pretty good performance. For the IMU, we implemented median, averaging, and complementary filters in an attempt to get a pitch reading that we trusted. In the end, the complementary filter worked best, combining the accelerometer and gyro readings to get a pitch estimate that neither drifted nor spiked.
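The two filters that ended up working can be sketched like this. It is a simplified Python illustration rather than the code that ran on the robots: the trusted range, window size, blend factor `alpha`, and the accelerometer axis convention are all assumed values for the example.

```python
import math
from collections import deque


class RangeAverageFilter:
    """Moving average that ignores readings outside a trusted distance range."""

    def __init__(self, min_cm=2.0, max_cm=200.0, window=5):
        self.min_cm = min_cm
        self.max_cm = max_cm
        self._samples = deque(maxlen=window)  # keeps only the newest readings

    def update(self, reading_cm):
        # Untrusted readings are dropped instead of being averaged in.
        if self.min_cm <= reading_cm <= self.max_cm:
            self._samples.append(reading_cm)
        if not self._samples:
            return None  # no trusted reading yet
        return sum(self._samples) / len(self._samples)


class ComplementaryPitchFilter:
    """Blend the integrated gyro rate (smooth, but drifts) with the
    accelerometer tilt (noisy, but does not drift)."""

    def __init__(self, alpha=0.98):
        self.alpha = alpha  # weight given to the gyro-based estimate
        self.pitch = 0.0    # degrees

    def update(self, gyro_rate_dps, accel_x, accel_z, dt):
        # Assumed axis convention: pitch is the tilt of gravity in the x-z plane.
        accel_pitch = math.degrees(math.atan2(accel_x, accel_z))
        gyro_pitch = self.pitch + gyro_rate_dps * dt
        self.pitch = self.alpha * gyro_pitch + (1.0 - self.alpha) * accel_pitch
        return self.pitch
```

A higher `alpha` trusts the gyro more and smooths out accelerometer spikes, while the small accelerometer term keeps pulling the estimate back so that gyro drift cannot accumulate.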

Beyond the sensor filtering, we also had to code all of the robots' mobility. They are differential drive robots with two drive wheels and two hidden caster wheels underneath. To drive them, we wrote code to calculate the pose of each robot (x, y, angle), which enabled forward and inverse kinematics. From this, we were able to tell a robot to drive to exact coordinates and face an exact angle, or have it navigate using sensors like the OpenMV camera while still tracking where it was in space. To get this working reliably, we combined the encoder values from the wheels with the IMU's gyro and accelerometer readings to estimate the pose of the robot.
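The pose tracking described above follows the standard differential drive model, sketched below in Python. This simplified version uses only the wheel encoders and leaves out the gyro and accelerometer fusion; the function names and the `track_width` parameter (distance between the two drive wheels) are assumptions for the example.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def update_pose(x, y, theta, d_left, d_right, track_width):
    """Forward kinematics: advance the pose by the distance each wheel
    has traveled since the last update (same units as track_width)."""
    d_center = (d_left + d_right) / 2.0        # travel of the robot's center
    d_theta = (d_right - d_left) / track_width  # change in heading (radians)
    # Use the heading halfway through the step for a better arc approximation.
    mid_heading = theta + d_theta / 2.0
    x += d_center * math.cos(mid_heading)
    y += d_center * math.sin(mid_heading)
    return x, y, wrap_angle(theta + d_theta)


def wheel_speeds(v, omega, track_width):
    """Inverse kinematics: wheel speeds (left, right) that produce a forward
    speed v and a turn rate omega (radians per second, counterclockwise)."""
    half = omega * track_width / 2.0
    return v - half, v + half
```

Calling `update_pose` every control loop with the latest encoder deltas keeps a running estimate of (x, y, angle), and `wheel_speeds` turns a desired motion back into commands for the two drive wheels.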

Finally, the robots had to communicate. We spent a short time working on an IR protocol, which did not end up working, so we went with WiFi (MQTT) communication instead. This way, we could keep tabs on all of the robots' states as they went through the scene, and they could talk to each other to perform the scene effectively.
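As a rough illustration of how state sharing over MQTT can be organized, here is a small Python sketch. The topic layout (`play/<robot>/state`), the JSON payload, and the `StateBoard` class are hypothetical and were made up for this example; the actual publishing and subscribing would go through an MQTT client library, which is not shown.

```python
import json


def state_topic(robot):
    """Hypothetical topic layout: one state topic per robot."""
    return f"play/{robot}/state"


def encode_state(state):
    """Payload a robot would publish when it enters a new state."""
    return json.dumps({"state": state})


class StateBoard:
    """Tracks the most recent state reported by every robot."""

    def __init__(self):
        self.states = {}

    def on_message(self, topic, payload):
        # Would be called by the MQTT client for each received message.
        parts = topic.split("/")
        if len(parts) != 3 or parts[0] != "play" or parts[2] != "state":
            return  # not a state topic, ignore it
        self.states[parts[1]] = json.loads(payload)["state"]

    def all_in(self, state, robots):
        """True once every listed robot has reported the given state."""
        return all(self.states.get(robot) == state for robot in robots)
```

A check like `all_in("ready", ["romeo", "juliet"])` is one way a robot could wait for the others before starting its part of a scene.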

Demo of Romeo following Juliet using the OpenMV camera